Welcome to the Era of Digital Therapy

Feeling anxious? Overwhelmed? Burnt out? Don’t worry, an AI is here to help.

Yes, really!

From Wysa to Woebot, mental health chatbots are offering 24/7 emotional support. They chat, comfort, give advice, and even check in on you daily. What used to require a therapist’s couch now lives in your phone.

But here’s the real question we need to ask:

Can we, or should we, trust artificial intelligence with our deepest emotional wounds?

Or are we unknowingly replacing "healing" with "hollow comfort"?

What Is an AI Therapist, Anyway?

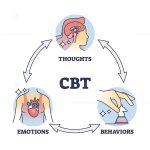

AI therapy bots are built around techniques like CBT (Cognitive Behavioral Therapy) and positive psychology. They analyze your text messages and respond with comforting, pre-programmed insights. They can say things like:

“You’re doing your best, and that’s enough.”

“Let’s try a breathing exercise together.”

On the surface, it feels human. But peel back the code and you’ll realize there’s no one on the other side.

Why People Are Turning to AI Therapy

There’s a reason these apps have millions of users worldwide:

- Instant access: No waiting for appointments. Your "therapist" is available at 2 a.m.

- Affordable or free: Many cost little or nothing, unlike therapy sessions that can run ₹1000–₹3000 each.

- No judgment: You can say anything, and it won’t flinch.

- Great for daily check-ins: It keeps you emotionally aware, even if you're not in crisis.

But Here's the Darker Side

Now let’s talk about the uncomfortable part.

- Lack of real empathy: AI doesn’t truly care; it simulates caring.

- No real-time crisis handling: Suicidal thoughts or trauma can't be safely handled by a bot.

- Risk of emotional dependence: Some users form real emotional bonds with these bots, even feeling heartbreak when they glitch or go offline.

- Data privacy: You’re pouring your emotions into a server. Who owns your pain?

The Bigger Ethical Question

The real danger isn’t that AI will fail us. It’s that it might succeed just enough to convince us we don’t need human support anymore.

It’s easy to feel seen and heard when a bot gives you the right words, but the depth and nuance of healing often come from messy, unpredictable human interactions.

We may be walking into a future where we trade authentic therapy for emotionally intelligent code without even realizing what we’ve lost.

So… What’s the Answer?

Let’s be clear: AI can help, especially people who don’t have access to traditional therapy or who want a daily mental health check-in. It’s a tool. A starting point. A bridge.

But it’s not a replacement for what a human therapist provides:

- Emotional safety

- Nonverbal understanding

- Moral, cultural, and situational context

- Real-time decision-making

What Do YOU Think?

- Would you confide your emotions to a machine?

- Have you ever tried AI therapy? Did it help you or make you feel lonelier?

- Should governments regulate AI in mental health?

Let’s have a real conversation, human to human.